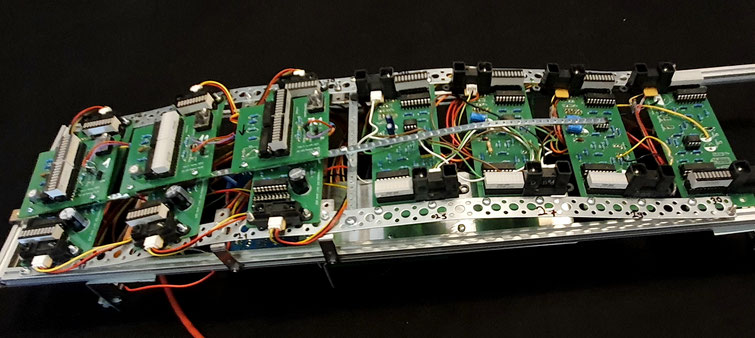

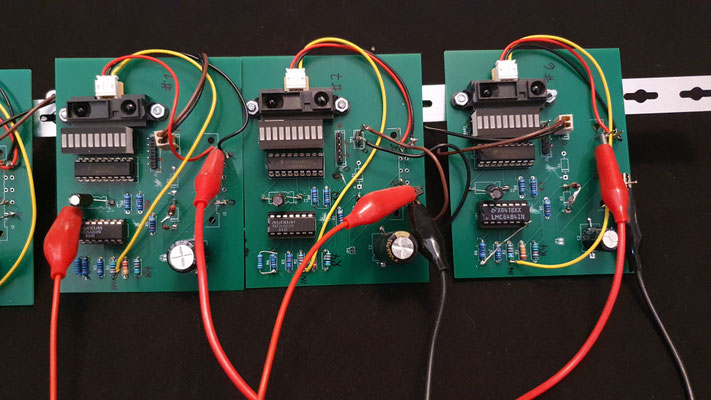

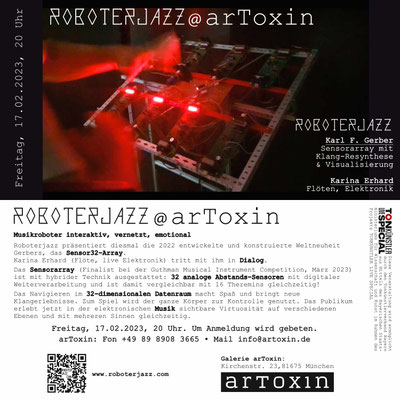

Q: Is the Sensor32 array a Theremin?

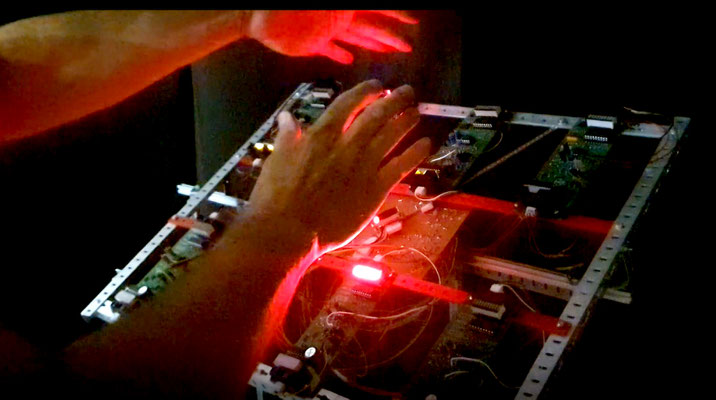

A: No, it is not. In this demo video my intention was to show smooth fading without sound, to avoid misunderstandings such as the Theremin comparison. In the video "noTheremin", Sensor32 is used exclusively to conduct 12 individual light bulbs, each flashing at a different speed (rhythm).